Believe in Your DeepSeek Skills but Never Stop Improving

- Date posted: 25-03-07 21:07

- Views: 9

- Author: Maude

From the DeepSeek v3 technical report. These benchmark results highlight DeepSeek Coder V2's competitive edge in both coding and mathematical reasoning tasks. Users have noted that DeepSeek’s integration of chat and coding functionalities offers a unique advantage over models like Claude and Sonnet. Accessing DeepSeek through its API gives users greater control over the model's behavior. The model's performance in mathematical reasoning is particularly impressive. The research represents an important step forward in the ongoing efforts to develop large language models that can effectively tackle complex mathematical problems and reasoning tasks. Step 5: Enjoy a secure, free, and open-source DeepSeek AI chat with reasoning capabilities! DeepSeek, a Chinese AI firm, is disrupting the industry with its low-cost, open-source large language models, challenging U.S. ’s equivalent to 65% of the annual U.S. For instance, the U.S. Supporting BF16 and FP16 data types, it uses a paged KV cache with a block size of 64, reaching up to 3000 GB/s for memory-bound operations and 580 TFLOPS for compute-bound operations on H800 SXM5 GPUs.
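To make the paged cache idea concrete, here is a minimal sketch of the bookkeeping a block size of 64 implies: how many fixed-size blocks a sequence needs, and how a logical token position maps to a physical block. The function names and the `block_table` layout are assumptions made for illustration, not the API of any particular kernel or library.

```python
import math
from typing import List, Tuple

BLOCK_SIZE = 64  # tokens per cache block, the figure quoted in the text


def blocks_needed(seq_len: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of fixed-size cache blocks needed to hold seq_len tokens."""
    if seq_len < 0:
        raise ValueError("seq_len must be non-negative")
    return math.ceil(seq_len / block_size)


def locate_token(position: int, block_table: List[int],
                 block_size: int = BLOCK_SIZE) -> Tuple[int, int]:
    """Map a logical token position to (physical block id, offset in block).

    block_table is a hypothetical per-sequence list: entry i holds the
    physical block id that stores logical block i.
    """
    logical_block, offset = divmod(position, block_size)
    return block_table[logical_block], offset


if __name__ == "__main__":
    print(blocks_needed(1000))             # blocks for a 1000-token sequence
    print(locate_token(130, [7, 2, 9]))    # token 130 lives in the third block
```

Because blocks are allocated one at a time, a sequence wastes at most one partially filled block rather than a whole preallocated buffer, which is the usual motivation for paging the cache.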

Unity Catalog easy - simply configure your model size (in this case, 8B) and the model name. Both versions of the model feature an impressive 128K token context window, allowing for the processing of extensive code snippets and complex problems. Sometimes problems are solved by a single monolithic genius, but this is usually not the best bet. The researchers evaluated their model on the Lean 4 miniF2F and FIMO benchmarks, which contain hundreds of mathematical problems. This means that these weights take up much less memory during inference, allowing DeepSeek to train the model on a limited GPU memory budget. Then there are companies like Nvidia, IBM, and Intel that sell the AI hardware used to power systems and train models. If the models are running locally, there remains a ridiculously small chance that somehow, they've added a back door. In fact, using Ollama, anyone can try running these models locally with acceptable performance, even on laptops that do not have a GPU. Another method users try is "hypnosis" or repetitive prompting, a technique in which the AI is gradually led into producing increasingly unrestricted responses through subtle prompt changes.
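The paragraph above does not say which technique shrinks the weights, so the following is only a back-of-the-envelope sketch that assumes lower-precision storage (quantization) as the example. It estimates the memory taken by the weights alone for an 8B-parameter model at different bit widths; activations, the KV cache, and runtime overhead are deliberately ignored.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_8B = 8e9  # the 8B model size mentioned in the text

if __name__ == "__main__":
    for label, bits in [("FP16/BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>9}: {weight_memory_gb(PARAMS_8B, bits):.1f} GB")
```

Under these assumptions, halving the bits per weight halves the weight footprint, which is why reduced-precision weights are a common way to fit a model on a laptop or a small GPU.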

Feedback from users on platforms like Reddit highlights the strengths of DeepSeek 2.5 in comparison with other models. OpenAI confirmed to Axios that it had gathered "some evidence" of "distillation" from China-based groups and is "aware of and reviewing indications that DeepSeek may have inappropriately distilled" AI models. Data is still king: Companies like OpenAI and Google have access to huge proprietary datasets, giving them a significant edge in training advanced models. HLT: Is that underlying lawsuit by the New York Times against OpenAI still pending? Startups building AI-driven solutions without being shackled to costly API subscriptions from OpenAI or Google. At most these companies are six months ahead, and perhaps it’s only OpenAI that is ahead at all. It’s also interesting to note that OpenAI’s comments seem (possibly deliberately) vague on the kind(s) of IP right they intend to rely on in this dispute. Another thing to note is that, like any other AI model, DeepSeek’s offerings aren’t immune to ethical and bias-related challenges based on the datasets they are trained on. Those who use the R1 model in DeepSeek’s app can also see its "thought" process as it answers questions. To download from the main branch, enter TheBloke/deepseek-coder-33B-instruct-GPTQ in the "Download model" box.

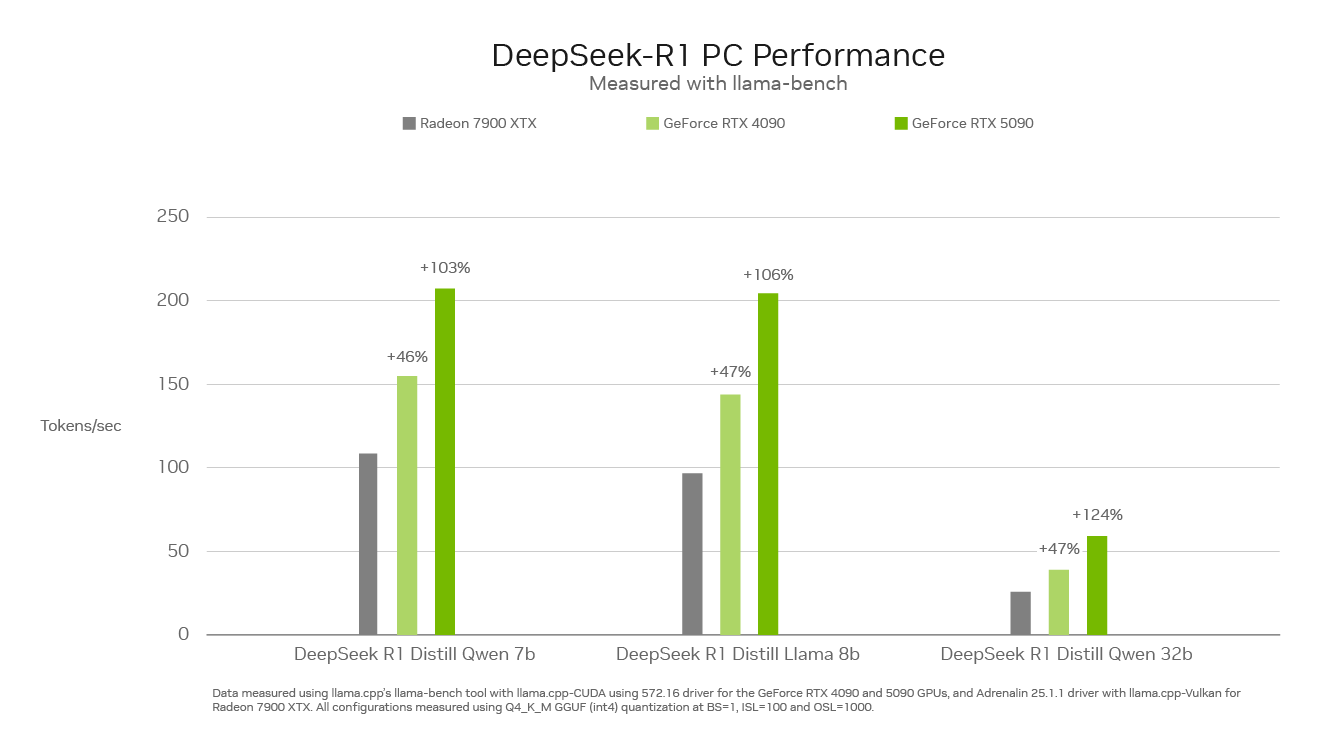

There’s a sense in which you want a reasoning model to have a high inference cost, because you want a good reasoning model to be able to usefully think almost indefinitely. During Wednesday’s earnings call, CEO Jensen Huang said that demand for AI inference is accelerating as new AI models emerge, giving a shoutout to DeepSeek’s R1. DeepSeek Coder V2 has demonstrated exceptional performance across various benchmarks, often surpassing closed-source models like GPT-4 Turbo, Claude 3 Opus, and Gemini 1.5 Pro in coding and math-specific tasks. When comparing DeepSeek 2.5 with other models such as GPT-4o and Claude 3.5 Sonnet, it becomes clear that neither GPT nor Claude comes anywhere close to the cost-effectiveness of DeepSeek. The DeepSeek models, often overlooked in comparison to GPT-4o and Claude 3.5 Sonnet, have gained decent momentum in the past few months. DeepSeek, like most AI models, has content moderation filters in place to prevent the generation of NSFW content. Performance Metrics: Outperforms its predecessors in several benchmarks, such as AlpacaEval and HumanEval, showcasing improvements in instruction following and code generation. Next, we looked at code at the function/method level to see if there is an observable difference when things like boilerplate code, imports, and licence statements are not present in our inputs.
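One way to obtain function- and method-level inputs of the kind described in the last sentence is sketched below. It assumes the inputs are Python source files, which the text does not state, and it uses the standard-library `ast` module to keep only function and method bodies, leaving out imports, licence headers, and other module-level code. The helper name `extract_functions` and the `SAMPLE` file are hypothetical.

```python
import ast
import textwrap
from typing import List

FUNC_TYPES = (ast.FunctionDef, ast.AsyncFunctionDef)


def extract_functions(source: str) -> List[str]:
    """Return the source of each top-level function and each method of a
    top-level class, without imports, comments above them, or other
    module-level statements."""
    tree = ast.parse(source)
    units = []
    for node in tree.body:
        if isinstance(node, FUNC_TYPES):
            candidates = [node]
        elif isinstance(node, ast.ClassDef):
            candidates = [n for n in node.body if isinstance(n, FUNC_TYPES)]
        else:
            candidates = []  # imports, assignments, etc. are skipped
        for func in candidates:
            # padded=True keeps the first line's indentation so that
            # dedent can shift the whole method back to column 0.
            segment = ast.get_source_segment(source, func, padded=True)
            if segment is not None:
                units.append(textwrap.dedent(segment))
    return units


SAMPLE = '''# Copyright (c) Example Corp. Licensed under MIT.
import os
from math import sqrt


def hypot(a, b):
    return sqrt(a * a + b * b)


class Greeter:
    def greet(self, name):
        return "hi " + name
'''

if __name__ == "__main__":
    for unit in extract_functions(SAMPLE):
        print(unit)
        print("---")
```

Each extracted unit can then be evaluated separately and compared against the result for the whole file to see whether the stripped material makes a difference.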
