Top 10 Tricks To Grow Your DeepSeek AI

- Date posted: 25-03-06 17:26

- Views: 2

- Author: Robt

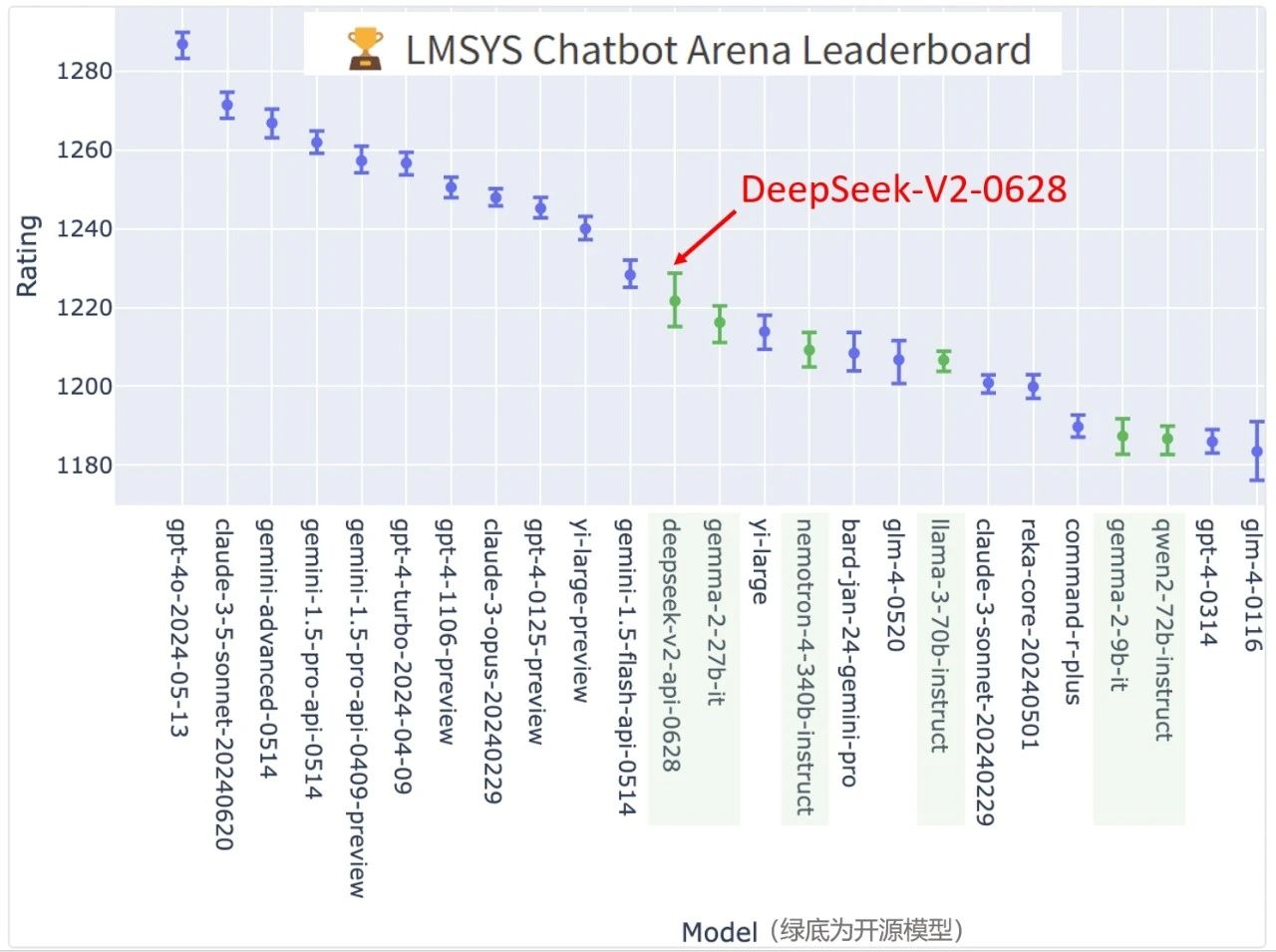

DeepSeek-V3 demonstrates competitive performance, standing on par with top-tier models such as LLaMA-3.1-405B, GPT-4o, and Claude-Sonnet 3.5, while significantly outperforming Qwen2.5 72B. Moreover, DeepSeek-V3 excels in MMLU-Pro, a more challenging educational knowledge benchmark, where it closely trails Claude-Sonnet 3.5. On MMLU-Redux, a refined version of MMLU with corrected labels, DeepSeek-V3 surpasses its peers. DeepSeek excels in predictive analytics by leveraging historical data to forecast future trends. Because Nvidia’s Chinese competitors are cut off from foreign HBM but Nvidia’s H20 chip is not, Nvidia is likely to have a significant performance advantage for the foreseeable future. In the early stages, starting with the US-China trade wars of Trump’s first presidency, the technology transfer perspective was dominant: the prevailing theory was that Chinese companies needed to first acquire fundamental technologies from the West, leveraging this know-how to scale up production and outcompete global rivals. Today, a principal foreign policy challenge for the nation is harnessing emerging technologies and understanding their implications faster and better than our adversaries. As for English and Chinese language benchmarks, DeepSeek-V3-Base shows competitive or better performance, and is especially good on BBH, MMLU-series, DROP, C-Eval, CMMLU, and CCPM. (2) Compared with Qwen2.5 72B Base, the state-of-the-art Chinese open-source model, DeepSeek-V3-Base, with only half of the activated parameters, also demonstrates remarkable advantages, especially on English, multilingual, code, and math benchmarks.

As for Chinese benchmarks, except for CMMLU, a Chinese multi-subject multiple-choice task, DeepSeek-V3-Base also shows better performance than Qwen2.5 72B. (3) Compared with LLaMA-3.1 405B Base, the largest open-source model with 11 times the activated parameters, DeepSeek-V3-Base also exhibits much better performance on multilingual, code, and math benchmarks. Next, we looked at code at the function/method level to see if there is an observable difference when things like boilerplate code, imports, and licence statements are not present in our inputs. Wall Street continues to see DeepSeek as a risk to U.S. And here lies perhaps the biggest impact of DeepSeek. The key distinction between auxiliary-loss-free balancing and sequence-wise auxiliary loss lies in their balancing scope: batch-wise versus sequence-wise. The experimental results show that, when achieving a similar level of batch-wise load balance, the batch-wise auxiliary loss can also achieve model performance comparable to the auxiliary-loss-free method. As illustrated in Figure 9, we observe that the auxiliary-loss-free model demonstrates greater expert specialization patterns, as expected. Specifically, while the R1-generated data demonstrates strong accuracy, it suffers from issues such as overthinking, poor formatting, and excessive length.
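To make the batch-wise versus sequence-wise distinction concrete, here is a minimal sketch. It is not DeepSeek's implementation: it assumes top-1 routing, a made-up routing table, and a simple illustrative imbalance score (how far the busiest expert exceeds a uniform share). The names `expert_load` and `max_violation` are hypothetical.

```python
from collections import Counter


def expert_load(assignments, num_experts):
    """Return the fraction of tokens routed to each expert."""
    counts = Counter(assignments)
    total = len(assignments)
    return [counts.get(e, 0) / total for e in range(num_experts)]


def max_violation(load):
    """Relative overload of the busiest expert versus a perfectly uniform load."""
    uniform = 1.0 / len(load)
    return max(load) / uniform - 1.0


# Hypothetical routing table (an assumption for illustration):
# each inner list holds the expert chosen for every token of one sequence.
num_experts = 4
batch = [
    [0, 0, 0, 0],  # sequence 1 only ever uses expert 0
    [1, 1, 1, 1],
    [2, 2, 2, 2],
    [3, 3, 3, 3],
]

# Sequence-wise scope: measure balance inside each sequence separately.
per_sequence = [max_violation(expert_load(seq, num_experts)) for seq in batch]

# Batch-wise scope: pool all tokens of the batch, then measure balance once.
pooled = [expert for seq in batch for expert in seq]
per_batch = max_violation(expert_load(pooled, num_experts))

print("sequence-wise imbalance:", per_sequence)
print("batch-wise imbalance:", per_batch)
```

In this toy batch every sequence is maximally unbalanced on its own, yet the pooled batch is perfectly balanced. A sequence-wise constraint would penalize this routing, while a batch-wise one would not, which leaves experts free to specialize per sequence or per domain.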

For non-reasoning data, such as creative writing, role-play, and simple question answering, we utilize DeepSeek-V2.5 to generate responses and enlist human annotators to verify the accuracy and correctness of the data. For example, certain math problems have deterministic results, and we require the model to provide the final answer within a designated format (e.g., in a box), allowing us to apply rules to verify the correctness. However, some experts have questioned the accuracy of DeepSeek's claims about chips and the costs involved in training its AI models. DeepSeek's arrival on the scene has upended many assumptions we have long held about what it takes to develop AI. This echoed DeepSeek's own claims about the R1 model. DeepSeek claims its LLM beat OpenAI's reasoning model o1 on advanced math and coding tests (AIME 2024, MATH-500, SWE-bench Verified) and scored just below o1 on another programming benchmark (Codeforces), graduate-level science (GPQA Diamond), and general knowledge (MMLU). SWE-Bench Verified is evaluated using the agentless framework (Xia et al., 2024). We use the "diff" format to evaluate the Aider-related benchmarks. We utilize the Zero-Eval prompt format (Lin, 2024) for MMLU-Redux in a zero-shot setting.
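The rule-based check on boxed answers mentioned above could look roughly like the sketch below. It assumes the "box" is a LaTeX `\boxed{...}` expression and that correctness means an exact match after whitespace is removed. The source specifies neither, and the helper names are hypothetical.

```python
import re

# Assumption: the designated format is a LaTeX \boxed{...} expression.
# This simple pattern does not handle nested braces such as \boxed{\frac{1}{2}}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def normalize(text):
    """Remove all whitespace so that ' 7 ' and '7' compare equal."""
    return "".join(text.split())


def extract_boxed(response):
    """Return the content of the last \\boxed{...} in the response, or None."""
    matches = BOXED.findall(response)
    return matches[-1] if matches else None


def is_correct(response, reference):
    """Rule-based check: the boxed final answer must equal the reference."""
    answer = extract_boxed(response)
    if answer is None:
        # No answer in the designated format counts as incorrect.
        return False
    return normalize(answer) == normalize(reference)


print(is_correct(r"Adding the terms gives \boxed{42}.", "42"))
print(is_correct("The answer is 42.", "42"))
```

Taking the last box rather than the first is a design choice for this sketch, on the assumption that a model may box intermediate results before the final one.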

We use CoT and non-CoT methods to evaluate model performance on LiveCodeBench, where the data are collected from August 2024 to November 2024. The Codeforces dataset is measured using the percentage of competitors. Note that during inference, we directly discard the MTP module, so the inference costs of the compared models are exactly the same. Their hyper-parameters to control the strength of auxiliary losses are the same as DeepSeek-V2-Lite and DeepSeek-V2, respectively. The platform hit the 10 million user mark in just 20 days, half the time it took ChatGPT to reach the same milestone. In contrast, DeepSeek says it made its new model for less than $6 million. A recent analysis by Wiseapp Retail found that DeepSeek was used by about 1.2 million smartphone users in South Korea during the fourth week of January, emerging as the second-most-popular AI model behind ChatGPT. DeepSeek could analyze vast swaths of software code and infrastructure configurations to uncover potential exploits faster than human teams or less advanced AI systems. LangChain Integration: Thanks to DeepSeek-V2’s compatibility with OpenAI, teams can easily integrate the model with LangChain.
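"Compatibility with OpenAI" usually means a server accepts the same chat-completions request shape that OpenAI clients send, which is why client libraries built for that shape can be pointed at another endpoint. The sketch below only builds such a request with the standard library and does not send it. The base URL, API key, and model name are placeholders rather than real DeepSeek values, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# All three values below are placeholders (assumptions), not real endpoints or names.
request = build_chat_request(
    base_url="https://llm.example.invalid/v1",
    api_key="PLACEHOLDER_KEY",
    model="placeholder-model-name",
    user_message="Summarize the DeepSeek-V3 benchmark results.",
)

print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With a provider's documented base URL, key, and model name substituted in, the same request shape is what an OpenAI-compatible client would be expected to send. Check the provider's own documentation before relying on any specific value.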
